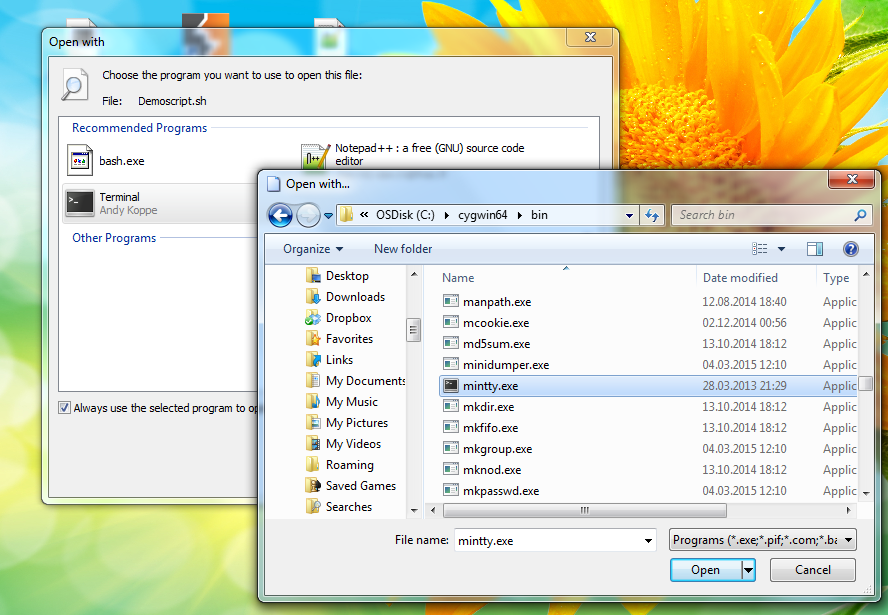

Cygwin is a great tool for running Linux applications on Windows. I primarily use it to run shell scripts I have already written. What I always missed was the ability to launch shell scripts with a simple double click. However, this is very easy to set up. Just right-click a .sh file and select “Open with…”. Now browse to “C:\cygwin(64)\bin” and select mintty.exe. Don’t forget to tick the checkbox at the bottom of the dialog to always use the selected application. From now on, just double-click any .sh file and it gets executed automatically.

With my new job as an IT security engineer in mind, I now surf the web with completely different eyes. Along the way I have already stumbled across several more or less severe security issues. I reported all of them to the responsible companies; however, they handled them in completely different ways. Some companies simply ignored me, while others invited me to their office for a chat and a coffee. This blog post is not about the technical details of any of these issues but about the risk taken by the person who reports a security problem.

A good bug report nails down one specific problem by documenting how to trigger it. For example, if you find a bug that allows you to buy stuff for free, the corresponding bug report must contain all the steps necessary to do so. However, that is exactly the problem.

Think about the following scenario: you, as a company, receive a bug report that documents an SQL injection vulnerability, and you decide to fix it soon (tm). A few weeks later your website is defaced by someone exploiting this specific vulnerability. Who do you suspect first? Furthermore, to document a problem in detail (and thereby help to fix it more quickly), a security researcher has to send several requests to the vulnerable part of the application. All of these “break-in attempts” are recorded in the web server’s log files, further strengthening the existing evidence. Even if the researcher documented everything and never exploited the issue to gain any advantage, this may now cause trouble for him. In the best case it will just be wasted time or a nice chat with a police officer.

The easiest way to minimize this risk is to communicate openly with the company responsible for the application. That way the researcher provides all the information necessary to reproduce the bug directly to the responsible person(s). However, this is only possible if the company is interested in getting the issue resolved. Another way for the researcher to protect himself might be to escalate the problem to the press. After being ignored for an extended period of time (several weeks, not days!), this might be your only possibility to finally get the issue noticed by the developers.

With this blog post I hope to raise awareness that security vulnerabilities are not only a problem for the company responsible for the particular application but possibly also for the person who discovered and reported the issue. When you receive your next bug report, please take the time to evaluate it in detail and respond in a timely manner. It’s also in your interest to talk to the person who discovered and reported it.

Almost six years ago, on the 9th of March 2009, I started my successful career at ToolsAtWork and ToolsOnAir. During that time I worked on many great things, including but not limited to projects related to planning, installing and managing OS X, Windows and Linux servers and developing software in many different languages; recently I mostly designed and implemented large video storage and archiving systems all around the world. I learned a lot, not only on the technical side but also about how to plan, manage and implement new workflows and solutions. Most importantly, I met very cool and inspiring people from all over the world working in many different industries. However, now it’s time for a change!

Starting in March 2015, I will shift my professional focus to IT security and join the Security Audit & Assessment Team at Kapsch BusinessCom AG. With this job change I will also shift the focus of this blog to more security-related topics.

I want to thank all my former colleagues at ToolsAtWork and ToolsOnAir, all our partners, the customers I worked with and all my readers here for their support and input. It was great fun working with you and I wish you all the best. I would love to hear from you in the comments or connect with you on either LinkedIn or Xing. Of course you are all invited to keep reading this blog to join me on my new journey.

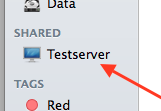

For me and many of my customers it would be a great feature to have Bonjour working over VPN connections. Apple’s Bonjour (also called mDNS or multicast DNS) is the service responsible for discovering other machines in your network and the services they provide. The most important feature for me is the file server integration in Finder, as shown on the right: all detected file servers appear in the left Finder sidebar and you can connect simply by clicking them. Unfortunately, this does not work over VPN connections, as multicast traffic is generally not routed.

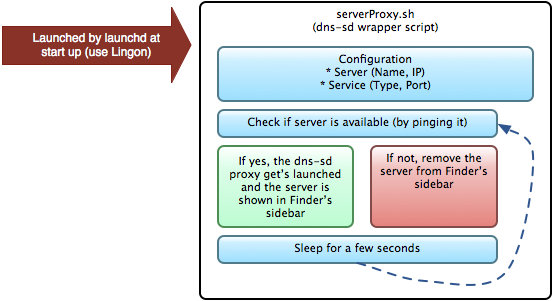

To simulate this file server discovery over a VPN connection, I wrote a small wrapper script for dns-sd. It checks whether a given server is available by pinging its IP and, if so, adds it to the sidebar using dns-sd’s proxy feature. You can check out the script at my Google Code snippet repository. The following diagram shows the inner workings.
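The core loop of such a wrapper can be sketched as follows. This is not the contents of serverProxy.sh; all settings (server name, host name, IP, service type, check interval) and the function names are hypothetical examples. It relies on `dns-sd -P Name Type Domain Port Host IP`, which registers a service together with a proxy address record and keeps advertising it until the process is killed.

```shell
#!/bin/sh
# Minimal sketch of a dns-sd proxy wrapper. All settings are hypothetical
# examples, not taken from serverProxy.sh; adapt them to your file server.
SERVER_NAME="Fileserver"           # name shown in the Finder sidebar
SERVER_HOST="fileserver.local"     # host name the proxy record announces
SERVER_IP="10.0.0.5"               # address reachable through the VPN
SERVICE_TYPE="_afpovertcp._tcp"    # AFP; use _smb._tcp and port 445 for SMB
SERVICE_PORT=548
CHECK_INTERVAL=30                  # seconds between reachability checks

# Succeed if the server answers one ping (on OS X, -t is a timeout in seconds).
server_reachable() {
    ping -c 1 -t 2 "$SERVER_IP" >/dev/null 2>&1
}

# Decide what to do: $1 = server reachable (1/0), $2 = proxy running (1/0).
next_action() {
    if [ "$1" = 1 ] && [ "$2" = 0 ]; then
        echo start
    elif [ "$1" = 0 ] && [ "$2" = 1 ]; then
        echo stop
    else
        echo none
    fi
}

main() {
    proxy_pid=""
    # Do not leave an orphaned dns-sd behind when launchd stops the script.
    trap 'if [ -n "$proxy_pid" ]; then kill "$proxy_pid" 2>/dev/null; fi; exit 0' INT TERM
    while true; do
        if server_reachable; then reachable=1; else reachable=0; fi
        # A proxy only counts as running if its dns-sd process still exists.
        if [ -n "$proxy_pid" ] && kill -0 "$proxy_pid" 2>/dev/null; then
            running=1
        else
            running=0
        fi
        case "$(next_action "$reachable" "$running")" in
            start)
                # dns-sd -P advertises the service and address until killed.
                dns-sd -P "$SERVER_NAME" "$SERVICE_TYPE" local "$SERVICE_PORT" \
                    "$SERVER_HOST" "$SERVER_IP" >/dev/null &
                proxy_pid=$!
                ;;
            stop)
                kill "$proxy_pid" 2>/dev/null
                proxy_pid=""
                ;;
        esac
        sleep "$CHECK_INTERVAL"
    done
}

# Only start the loop when called with "run", so the functions can be sourced and tested.
if [ "${1:-}" = "run" ]; then
    main
fi
```

Started as `./myServerProxy.sh run`, the sketch advertises the server while it answers pings and withdraws it again when the VPN goes down. Keeping the start/stop decision in `next_action` makes that logic testable without a network.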

To install it, download serverProxy.sh, rename it (for example to myServerProxy.sh, so that you can run multiple proxies side by side), make it executable, adapt the settings at the top and create a launchd configuration. I recommend using Lingon to create a “My Agent” launchd job that gets loaded at startup and keeps the script alive. In theory it should not crash, but who knows. You can use as many proxies as you like. Finally, reboot and check whether the configured server is shown after you connect to your VPN.
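If you prefer a plain file over Lingon, a user agent can also be written by hand. The function below is a sketch that writes a minimal launchd property list with `RunAtLoad` and `KeepAlive`, i.e. a job that starts when the agent is loaded and is restarted whenever it exits. The function name, label and paths are hypothetical, and the arguments are inserted without XML escaping, so they must not contain `&` or `<`.

```shell
#!/bin/sh
# Write a minimal launchd agent plist (sketch). $1 = job label, $2 = target
# file, remaining arguments = program path and its arguments (not escaped).
write_agent_plist() {
    label=$1 target=$2
    shift 2
    {
        cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>Label</key>
    <string>$label</string>
    <key>ProgramArguments</key>
    <array>
EOF
        # One <string> element per program argument
        for arg in "$@"; do
            printf '        <string>%s</string>\n' "$arg"
        done
        # Start the job when the agent is loaded and restart it if it exits
        cat <<'EOF'
    </array>
    <key>RunAtLoad</key>
    <true/>
    <key>KeepAlive</key>
    <true/>
</dict>
</plist>
EOF
    } > "$target"
}
```

For example, `write_agent_plist com.example.myserverproxy ~/Library/LaunchAgents/com.example.myserverproxy.plist /usr/local/bin/myServerProxy.sh` followed by `launchctl load ~/Library/LaunchAgents/com.example.myserverproxy.plist`. Agents in `~/Library/LaunchAgents` are also loaded automatically when you log in.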

Just a quick Linux hint today. If you put an unsupported SFP+ module into an Intel Corporation 82599EB 10-Gigabit SFI/SFP+ card, the corresponding network interface (pXpY or ethX) is removed from the system. The following message is logged in “/var/log/messages”:

Nov 19 11:07:03 hostname kernel: ixgbe 0000:0f:00.0: failed to load because an unsupported SFP+ module type was detected.

Once a supported module is in place, you have to unload and reload the card’s kernel driver to restore the interface. To do so, run the following commands as root. Please note that all other ports using this driver are also temporarily unavailable during that time, so active transmissions will probably fail.

rmmod ixgbe; modprobe ixgbe
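If you are not sure why an interface vanished, you can check for the log message first and only then reload the driver. The helpers below are a sketch; their names are hypothetical and the search string is the message shown above.

```shell
#!/bin/sh
# Message written by ixgbe when it rejects an SFP+ module.
SFP_ERROR='failed to load because an unsupported SFP+ module type was detected'

# Succeed if the given log file contains the ixgbe SFP+ error.
sfp_error_logged() {
    grep -qF "$SFP_ERROR" "$1"
}

# Reload the driver. Must run as root and briefly takes down ALL ixgbe ports.
reload_ixgbe() {
    rmmod ixgbe; modprobe ixgbe
}
```

Used as `sfp_error_logged /var/log/messages && reload_ixgbe`, this is meant for a manual check only: the old message stays in the log, so running it from cron would keep reloading the driver.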

Red Hat describes a similar issue in its knowledge base article #275333.

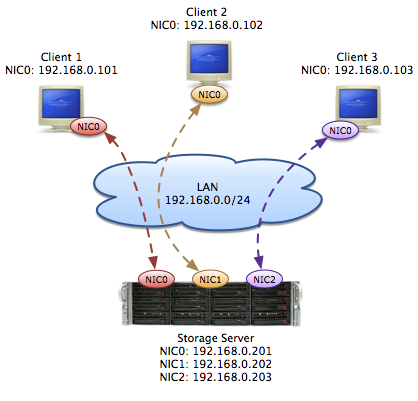

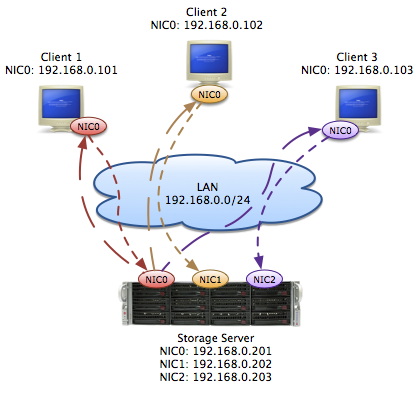

At a recent Linux video storage installation, my colleague Georg and I encountered really strange problems that we had never seen before. Our initial goal was to connect five Windows 7 machines running Avid Media Composer 7 to separate NICs on the new storage system. As it was impossible to install new cables, we simply added a second IP address to the LAN connection to ensure correct traffic routing and to fulfil the bandwidth requirements. What we did not know was that this change would cause NetBIOS name resolution and discovery to fail, which is not an uncommon issue. For me this would have been just a minor annoyance; however, the client had several workflows that depended on it. Hence we had to revert everything and find another workaround.

In the end we agreed to simply connect all NICs to the client’s unmanaged core switch and assign a dedicated IP to each of them. The image below gives a simplified network and traffic overview. We believed we had solved the NetBIOS issue while still being able to guarantee the bandwidth. If only we had known!

The first issue we encountered was that we could not connect to the internet, but only from the new Linux system. We did not investigate this further, as the client reported several other issues with their firewall configuration. We proceeded with our performance test, which yielded miserable results!

After several hours we finally found out that the Windows clients correctly sent their traffic to their designated NIC on the central Linux storage; however, all the corresponding replies were sent from one single NIC, limiting the total read performance to the bandwidth of that single link. The picture below illustrates the traffic flow we observed.

With this knowledge we found several articles, like “Routing packets back from incoming interface”, describing this behaviour. Linux, by design, considers packets individually for routing purposes. This means that the routing decision is based only on the packet itself (essentially its destination address) and not on whether it is a reply to a packet that arrived on a particular interface.

This also explained why we were not able to connect to the internet. Although only one interface had a default gateway configured, the entries in the routing table were not correct for this setup. What this means is that we sent an Ethernet frame over, say, NIC0 with the source IP address of NIC1 to the default gateway. The firewall on that gateway, however, detected that the MAC address of the incoming frame did not match the MAC address of NIC1 and therefore suspected an ARP spoofing attack. Consequently, the traffic was blocked!

Luckily, we can use additional custom routing tables to build a workaround. Novell’s KB entry “Reply packets are sent over an unexpected interface” explains in detail how to do it. With its help we solved our issue, and now all NICs are utilized as expected. As we did not know about this behaviour before, it came as quite a surprise to us. However, from a developer’s point of view this design makes absolute sense.
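The idea behind such custom routing tables can be sketched as follows; this is not a copy of the Novell instructions. Each NIC gets its own table containing a route for the local subnet (and, only for the NIC that talks to the gateway, a default route), plus a rule that sends every packet with that NIC’s source address through its table. The function name, interface names, addresses and table numbers are hypothetical, and the function only prints the `ip` commands so you can review them first.

```shell
#!/bin/sh
# Print the policy-routing commands for one NIC (dry run only).
# $1 = interface, $2 = its IP address, $3 = its subnet,
# $4 = routing table number, $5 = optional default gateway.
policy_routing_cmds() {
    dev=$1 addr=$2 subnet=$3 table=$4 gw=${5:-}
    # Reach the local subnet through this NIC, with its own address as source.
    echo "ip route add $subnet dev $dev src $addr table $table"
    # Only the NIC that is allowed to talk to the gateway gets a default route.
    if [ -n "$gw" ]; then
        echo "ip route add default via $gw dev $dev table $table"
    fi
    # Packets with this NIC's address as source use its own table.
    echo "ip rule add from $addr table $table"
}
```

For two NICs in the same subnet this could look like `policy_routing_cmds eth0 192.168.1.10 192.168.1.0/24 100 192.168.1.1 | sh` and `policy_routing_cmds eth1 192.168.1.11 192.168.1.0/24 101 | sh`, run as root. Rules and routes added this way do not survive a reboot, so they still need to go into your distribution’s network configuration.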

Currently there are several articles, like the one on Ars Technica, that complain about the DNS resolver in OS X 10.10. Among other things, they report issues with name resolution per se and with Bonjour machine names that get changed. Many of these posts then suggest replacing the new discoveryd with the legacy mDNSResponder service.

This post acts as a warning: Never ever replace core system components!

By following the instructions to replace discoveryd you are completely on your own. By replacing such a vital system component you can introduce all kinds of bugs. Many of those may not even look related to name resolution but are triggered by some strange side effect. Furthermore I’m pretty sure Apple does not test their updates with this Frankenstein-like system configuration. Last but not least you may even introduce security problems.

If you run into bugs, which is inevitable, please report them to the developers and wait for a system update to fix them. You simply don’t know what problems you cause by doing otherwise.

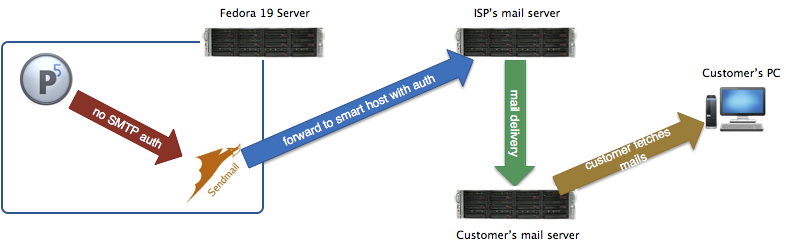

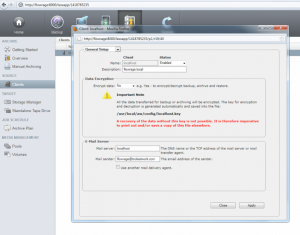

If you are responsible for backup or archiving systems, you know how important it is to get system notifications. You want to know if a job failed! My backup/archiving software of choice, Archiware P5, however, does not yet support SMTP user authentication or TLS, so we have to build a workaround to support most generally available mail servers.

In this post I will explain how to install sendmail on Fedora 19 and how to configure it to use a smart host. I opted for sendmail as I have used it before and mostly know how to configure it. Furthermore, I will show what to change in P5’s interface. The image below illustrates the desired mail delivery path.

The following steps should work on any Red Hat-based distribution without further changes and should be easily adaptable to other Linux-based operating systems. Many commands in this post are based on the ones used in my previous article “UNIX & PHP: Configure sendmail to forward mails”.

Install Software

The first thing we have to do is install the required software. It’s very important to also add the sendmail-cf configuration files package.

yum install sendmail sendmail-cf

Mail Credentials

In the next step we have to append the mail server credentials to “/etc/mail/authinfo”.

AuthInfo:your_mail_server.your_domain.tld "U:your_username" "P:your_password"

Update Configuration

After that we have to update the “/etc/mail/sendmail.mc” sample configuration.

First, we have to append the smart host configuration to the end of the file.

FEATURE(`authinfo',`hash /etc/mail/authinfo')
define(`SMART_HOST', `your_mail_server.your_domain.tld')

After that uncomment the following line by removing the dnl prefix:

define(`confAUTH_MECHANISMS', `EXTERNAL GSSAPI DIGEST-MD5 CRAM-MD5 LOGIN PLAIN')dnl

Select MTA

To ensure that we really use sendmail as the MTA, run the following alternatives command and choose “/usr/sbin/sendmail.sendmail”.

alternatives --config mta

Apply Configuration

To apply all our changes run the following commands:

cd /etc/mail/
makemap hash authinfo < authinfo
m4 sendmail.mc > sendmail.cf
service sendmail restart

We now have a fully functional SMTP server running on our local machine that forwards all incoming mails to the configured smart host.
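Before touching P5, it can help to send a test message through the local sendmail to check the relay. The sketch below pipes a minimal message to `sendmail -f <sender> <recipient>`; the function name and the addresses in the usage line are placeholders, and `SENDMAIL` can be overridden for a dry run.

```shell
#!/bin/sh
# Send a minimal test message through the local MTA (sketch).
# SENDMAIL can be overridden, e.g. to capture the message instead of sending it.
SENDMAIL=${SENDMAIL:-/usr/sbin/sendmail}

send_test_mail() {
    from=$1 to=$2
    # Headers, an empty line, then the body; -f sets the envelope sender
    printf 'From: %s\nTo: %s\nSubject: sendmail smart host test\n\nRelaying via the smart host works.\n' \
        "$from" "$to" | "$SENDMAIL" -f "$from" "$to"
}
```

Run something like `send_test_mail p5@your_domain.tld admin@your_domain.tld` and then look at the mail log (on Fedora typically “/var/log/maillog”) to see whether the smart host accepted the message.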

Archiware P5 Configuration

To finish the configuration, we have to ensure that Archiware P5 uses the correct mail server and sender address. To do so, log into the P5 interface and open the localhost Client preferences as shown in the screenshot below. Then enter “localhost” as Mail server. Furthermore, provide a valid Mail sender address.

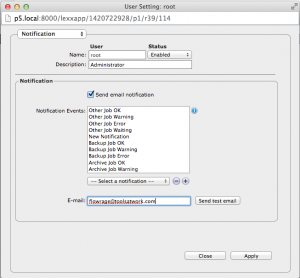

Finally, within the Notification section in the User Settings provide the user’s email address and select the desired notifications for the user. By hitting the “Send test mail” button you are able to test your configuration. Don’t forget to customise the list of events that should trigger a notification.

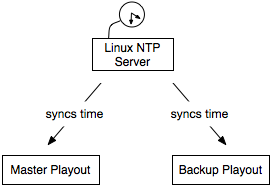

This year’s last post is all about time. More precisely, it’s about how to get NTP working within an isolated network. The Network Time Protocol is a network protocol used to synchronise computer clocks across networks. It is necessary because computers measure time using oscillating crystals, and each crystal oscillates at a slightly different frequency, which causes the local clocks of different systems to drift apart. This can cause problems in distributed systems.

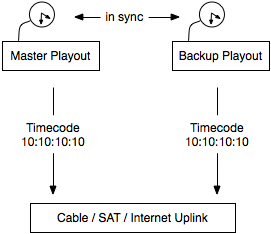

One such problem occurs within redundant playout systems with multiple servers. All systems need exactly the same time reference (and they often use the local system time for this) to play the same video frame at the same moment. Otherwise there is a visible service disruption during fallback.

As this example shows, it’s more about a coherent time source / reference than about a correct one. In other words, it is more important that all systems have exactly the same time than that this time is correct; it does not really matter if it’s 0.5 seconds ahead of the correct time.

To achieve this, I always use one Linux system within the isolated broadcast network as NTP server, running ntp. This server is queried by all other systems and shares its local time.

There is only a small problem with this setup. As the time source for the Linux NTP server is only its oscillating crystal and not a precise reference like an atomic clock, the other systems don’t trust its information: its stratum is simply too high. There are two ways to solve this issue:

- You operate the server using the Undisciplined Local Clock

- or you use Orphan mode
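Both options boil down to a few lines in ntpd’s configuration file (usually “/etc/ntp.conf”). The helper below is a sketch that simply prints those lines for the chosen mode; the function name is hypothetical and stratum 10 is an assumed, commonly used value. Use only one of the two modes.

```shell
#!/bin/sh
# Print ntp.conf lines for an isolated NTP server (sketch, ntpd syntax).
ntp_server_conf() {
    case "$1" in
        local)
            # Undisciplined Local Clock: the local clock driver 127.127.1.0,
            # announced with a fixed stratum the clients still accept
            echo "server 127.127.1.0"
            echo "fudge 127.127.1.0 stratum 10"
            ;;
        orphan)
            # Orphan mode: ntpd serves its own time at this stratum
            # when no better source is reachable
            echo "tos orphan 10"
            ;;
        *)
            echo "usage: ntp_server_conf local|orphan" >&2
            return 1
            ;;
    esac
}
```

On the server this could be used as `ntp_server_conf orphan >> /etc/ntp.conf` followed by a restart of ntpd; the clients then simply point at the server with a regular `server` line.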

Whichever you use, you will get a coherent time reference on all nodes within the network. However be aware that it’s just a relative time.

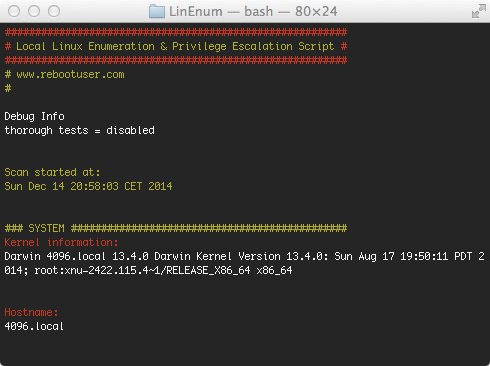

A few weeks ago I learned about LinEnum. Its original author, owen, described it as follows:

It’s a very basic shell script that performs over 65 checks, getting anything from kernel information to locating possible escalation points such as potentially useful SUID/GUID files and Sudo/rhost mis-configurations and more.

The first thing that came to my mind was whether this script would work on OS X. I cloned the GitHub repository to my Mac and was immediately greeted with multiple error messages. As I had a few spare minutes, I forked the repository and fixed the major bugs.

As I had to disable some tests, I hope to find more time to fix and re-enable them. My goal is to maintain Linux compatibility and only extend the script to fully work on OS X. I think this could become a handy quick-check tool.
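To illustrate the kind of change involved, here is a hypothetical example (not taken from LinEnum or from my fork) of a single check that keeps its Linux behaviour and only adds an OS X branch: listing the local user accounts.

```shell
#!/bin/sh
# Hypothetical example of a check that works on both Linux and OS X:
# list local user accounts using the mechanism each platform provides.
list_users() {
    case "$(uname -s)" in
        Darwin)
            # OS X manages accounts in Directory Services
            dscl . -list /Users
            ;;
        *)
            # Linux: account names are the first field of /etc/passwd
            cut -d: -f1 /etc/passwd
            ;;
    esac
}
```

Dispatching on `uname -s` inside each check keeps the existing Linux code path untouched, which fits the goal of extending the script rather than rewriting it.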